
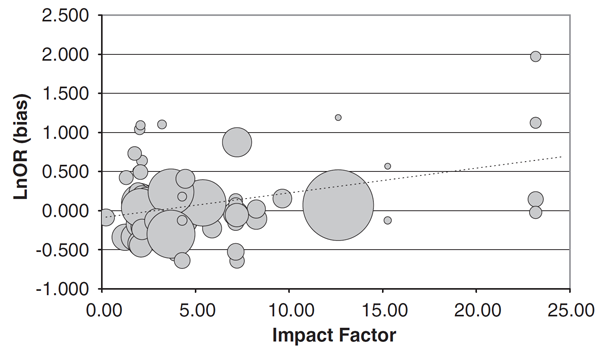

Now, I've just been sent a paper (subscription required) which provides evidence that the reliability of some research papers correlates negatively with the journal impact factor (IF). In other words, the higher the IF of the journal in which a paper was published, the less reliable the research tends to be. This particular evidence holds only for gene association studies, but given the high correlation between IF and retractions, it likely holds for other research as well. So what is the data? The paper contains one figure:

On the X-axis you see the impact factor and on the Y-axis a measure for the bias of the gene association data on a logarithmic scale. How did the authors calculate this bias score? In their own words:

We divided the individual study odds ratio (OR) by the pooled OR, to arrive at an estimate of the degree to which each individual study over- or underestimated the true effect size, as estimated in the corresponding meta-analysis. We have recently used this method to identify a biasing effect of research location and resources. (Munafo MR, Matheson IJ, Flint J. Mol Psychiatry 2007; 12: 454–461)
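To make the calculation concrete, here is a minimal sketch in Python of the ratio described in the quote. The function names and the odds ratios are made up for illustration and are not taken from the paper, and the use of log10 is an assumption, since the figure is only described as using "a logarithmic scale".

```python
import math


def bias_score(study_or: float, pooled_or: float) -> float:
    """Individual study odds ratio divided by the pooled (meta-analysis) odds ratio.

    A value above 1 means the study reports a larger OR than the pooled
    estimate; a value below 1 means it reports a smaller one.
    """
    if study_or <= 0 or pooled_or <= 0:
        raise ValueError("odds ratios must be positive")
    return study_or / pooled_or


def log_bias_score(study_or: float, pooled_or: float) -> float:
    """Bias score on a log scale (base 10 is an assumption, not from the paper)."""
    return math.log10(bias_score(study_or, pooled_or))


# Hypothetical (study OR, pooled OR) pairs, for illustration only.
examples = [(2.4, 1.2), (1.2, 1.2), (0.6, 1.2)]

for study_or, pooled_or in examples:
    ratio = bias_score(study_or, pooled_or)
    log_ratio = log_bias_score(study_or, pooled_or)
    print(f"study OR={study_or}, pooled OR={pooled_or}: "
          f"ratio={ratio:.2f}, log10 ratio={log_ratio:+.2f}")
```

On the log scale, a study that matches the pooled estimate sits at zero, and a study reporting twice the pooled OR is as far above zero as a study reporting half of it is below.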

Thus, the authors plot a value that indicates by how much a single study over- or underestimates the actual effect of a gene-phenotype association, where the actual effect is estimated by taking many studies into account. The size of the circles on the graph indicates the sample size of the study. The overall "R squared" value, or coefficient of determination, for this correlation is only 0.13, but at a highly significant P=0.00002. This means that the correlation is pretty weak, but it is statistically significant. This sort of correlation, low R² but statistically significant, corresponds roughly to the pattern of correlations one can see with citations: IF predicts the citations of a paper to some degree, but not very reliably. Likewise, IF is predictive of a gene association study's unreliability, but not very reliably. Or, phrased differently, IF is about as predictive of a paper's unreliability as it is of its citations, which I'd consider quite bad for something that decides scientific careers.
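How can a correlation be weak and highly significant at the same time? The rough sketch below holds R² fixed at the 0.13 reported above and varies the number of data points. The numbers of studies used here are hypothetical, since the post does not state how many studies are in the figure, and the p-value comes from the Fisher z approximation, which is a stand-in chosen for simplicity and not necessarily the test the authors used.

```python
import math


def approx_p_value(r_squared: float, n: int) -> float:
    """Approximate two-sided p-value for a correlation with the given R^2.

    Uses the Fisher z-transformation: atanh(r) * sqrt(n - 3) is treated as a
    standard normal statistic under the null hypothesis of no correlation.
    """
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("r_squared must be in [0, 1)")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    r = math.sqrt(r_squared)
    z = math.atanh(r) * math.sqrt(n - 3)
    # Two-sided tail probability of a standard normal variable.
    return math.erfc(z / math.sqrt(2.0))


R_SQUARED = 0.13  # value reported for the figure

# Hypothetical numbers of studies, for illustration only.
for n in (10, 30, 100, 300):
    p = approx_p_value(R_SQUARED, n)
    print(f"n={n:4d}  R^2={R_SQUARED}  approx. two-sided p={p:.2g}")
```

The same R² that is unremarkable with a handful of points becomes highly significant with enough of them: significance says the relationship is unlikely to be zero, while R² says how much of the scatter it explains.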

But another important point can be seen in the graph: IF is also predictive of the sample size of the gene association study: the higher the IF of the journal in which the study was published, the lower the sample size. One could interpret this as evidence that high-IF journals are more likely to publish a large effect, even though it is only backed up by a small sample size, while low-IF journals require a more solid amount of data to back up the authors' claims.

Taken together, this study provides some evidence for one of the potential mechanisms underlying the very strong correlation between IF and retractions we've seen before: authors are more likely to publish unreliable data with predominantly overestimated effect sizes in high-IF journals. Importantly, this constitutes a mechanism which cannot be explained by high-IF journals being more closely scrutinized than low-IF journals. Instead, it suggests that at least a portion of the retractions in high-IF journals is due to the studies published there being more likely to be flawed than studies in low-IF journals.

In the words of the authors:

Our results indicate that genetic association studies published in journals with a high impact factor are more likely to provide an overestimate of the true effect size. This is likely to be in part due to the small sample sizes used and the correspondingly low statistical power that characterizes these studies. Initial reports of genetic association published in journals with a high impact factor should therefore be treated with particular caution. However, although we cannot necessarily generalize our findings to other research domains, there are no particular reasons to expect that genetic association studies are unique in this respect.

Munafò, M., Stothart, G., & Flint, J. (2009). Bias in genetic association studies and impact factor. Molecular Psychiatry, 14(2), 119-120. DOI: 10.1038/mp.2008.77

Posted on Monday 26 December 2011 - 23:26:04
